Understanding User Perceptions of AI

Client

Portfolio Project

Year

2026

Services

User Research, AI, Survey Design, Python

Project Overview

Goal: Explore how people understand, use, and trust AI systems in everyday life while demonstrating user research and AI/technical skills.

Key points:

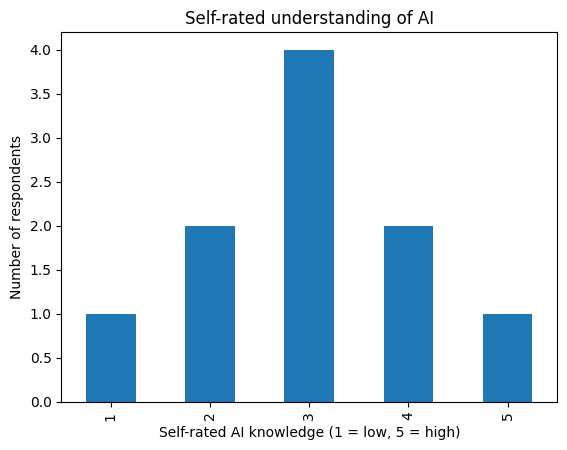

- Small-scale survey (n=10) with rating scales, multiple choice, and open-ended questions.

- Used Python (pandas + matplotlib) to clean, analyze, and visualize data.

- Combined quantitative and qualitative analysis to uncover patterns in trust, knowledge, and behaviour.

The "Why"

As a user researcher, I wanted to grow my skills by exploring AI, which is not just a trend but a transformative technology. I aimed to get ahead and show that I can integrate an understanding of AI into research practice, producing insights that are both actionable and human-centered.

I decided the best way to do this was to create a survey myself, gather the data, and use Python and ChatGPT to help find insights. Because I had no Python skills before starting this project, I first took an introductory Python course to set myself up.

Methods

Survey Design:

- Measured AI knowledge (1–5 scale) and trust in AI outputs (1–5 scale).

- Explored open-ended experiences with AI errors, context issues, and ethical concerns.

- Combined quantitative and qualitative questions to provide a holistic view.

Data Analysis:

- Python / pandas: Data cleaning, column renaming, numeric conversions.

- Matplotlib: Bar charts, scatter plots, and descriptive visualizations.

- Manual thematic analysis: Identified recurring qualitative themes, then cross-checked them against keyword frequency.
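The cleaning steps listed above (column renaming and numeric conversion) can be sketched roughly as follows. This is a minimal illustration, not the project's actual script: the question wording, the short column names `knowledge` and `trust`, and every value in the table are invented assumptions.

```python
import pandas as pd

# Hypothetical raw export: question text and answers are invented for illustration.
raw = pd.DataFrame({
    "How would you rate your knowledge of AI? (1-5)": ["3", "4", "2", "5", "n/a"],
    "How much do you trust AI outputs? (1-5)": ["3", "4", "2", "4", "1"],
})

# Rename long question text to short, code-friendly column names (assumed names).
df = raw.rename(columns={
    "How would you rate your knowledge of AI? (1-5)": "knowledge",
    "How much do you trust AI outputs? (1-5)": "trust",
})

# Convert rating columns from text to numbers; unparseable answers become missing values.
for col in ["knowledge", "trust"]:
    df[col] = pd.to_numeric(df[col], errors="coerce")

# Keep only rows where both ratings are present before analysis.
clean = df.dropna(subset=["knowledge", "trust"]).reset_index(drop=True)
print(clean)
```

Using `errors="coerce"` turns stray text answers into missing values instead of raising an error, which makes it easy to see and drop incomplete rows in a small survey.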

Key Insights

Knowledge & Usage Patterns

- Participants fell into these groups:

  - Low Awareness

    - Limited knowledge, cautious use of AI

  - Emerging Learners

    - Some knowledge, keen to learn more

    - Daily use, often for professional/business purposes

Trust & AI Errors

- Trust correlated positively with knowledge (correlation = 0.72, n=10).

- Open-ended insights revealed:

  - Hallucinations / errors: weird titles, fabricated citations

  - Fact-checking behaviour: participants verify AI outputs

  - Contextual misunderstanding: AI misinterprets intent

  - Bias / ethical concerns: e.g., image generation altered content without consent
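A knowledge-versus-trust correlation of this kind could be computed and plotted along these lines. The sketch assumes a Pearson correlation (the pandas default), which the write-up does not specify, and also shows a rank-based (Spearman-style) variant that may suit 1–5 ratings. The ratings below are made up for illustration and are not the survey responses, so the result will not match the figure reported above.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt
import pandas as pd

# Made-up ratings for illustration only; these are NOT the survey data.
ratings = pd.DataFrame({
    "knowledge": [1, 2, 2, 3, 3, 4, 4, 5, 5, 3],
    "trust":     [2, 2, 3, 3, 2, 4, 3, 4, 5, 4],
})

# Pearson correlation (pandas default for Series.corr).
pearson_r = ratings["knowledge"].corr(ratings["trust"])

# Spearman correlation, computed as Pearson on ranks (ties get average ranks).
spearman_r = ratings["knowledge"].rank().corr(ratings["trust"].rank())

# Scatter plot of the two rating scales.
fig, ax = plt.subplots()
ax.scatter(ratings["knowledge"], ratings["trust"])
ax.set_xlabel("AI knowledge (1-5)")
ax.set_ylabel("Trust in AI outputs (1-5)")
ax.set_title(f"Knowledge vs. trust (Pearson r = {pearson_r:.2f})")

print(f"Pearson r = {pearson_r:.2f}, Spearman rho = {spearman_r:.2f}")
```

With only ten respondents, a coefficient like this is best read as a pattern worth testing on a larger sample rather than a firm estimate.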


Human-Driven Insights

- Automated keyword analysis supported themes but did not fully capture nuanced insights.

- Manual thematic coding highlighted ethical and behavioural subtleties.

- Shows ability to combine human judgment with technical tools, a key skill for AI-focused research.
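A keyword-frequency check of the kind described above might look like the following sketch. The example responses, the theme labels, and the keyword lists are all hypothetical; in practice the keywords would come from the manually coded themes.

```python
from collections import Counter

# Invented open-ended answers for illustration; not quotes from participants.
responses = [
    "It made up a citation that did not exist, so I always fact-check now.",
    "Sometimes it misunderstands what I mean and I have to rephrase.",
    "I double check anything important because of errors.",
]

# Hypothetical theme -> keyword mapping derived from manual coding.
theme_keywords = {
    "hallucinations_errors": ["made up", "fabricated", "error", "wrong"],
    "fact_checking": ["fact-check", "double check", "verify"],
    "context_misunderstanding": ["misunderstand", "misinterpret", "rephrase"],
}

def count_theme_mentions(texts, keywords_by_theme):
    """Count how many responses mention at least one keyword for each theme."""
    counts = Counter()
    for text in texts:
        lowered = text.lower()
        for theme, keywords in keywords_by_theme.items():
            # Simple substring match; a response counts once per theme at most.
            if any(keyword in lowered for keyword in keywords):
                counts[theme] += 1
    return counts

theme_counts = count_theme_mentions(responses, theme_keywords)
for theme, n in theme_counts.most_common():
    print(f"{theme}: {n} of {len(responses)} responses")
```

Substring matching like this is deliberately crude: it misses paraphrases and cannot judge tone or intent, which is why the counts only support, rather than replace, the manual coding.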

Next Steps / Product Implications

If this were a real project, these are the recommendations I would feed back to the team:

- Develop educational resources for AI literacy.

- Improve AI output reliability and mitigate hallucinations.

- Explore trust-building mechanisms in AI interfaces.

- Expand survey to larger, more diverse samples for broader insights.

What I Learned

In this small sample, people with more AI knowledge tended to trust AI outputs more, but they still fact-checked and noticed errors. Learning and applying Python allowed me to clean, analyze, and visualize survey data, giving me confidence in my technical skills. Combining this with qualitative review provided richer insights. The project also highlighted opportunities for future research, such as exploring larger datasets, designing AI literacy interventions, or testing trust-building features in AI products.